Word Cloud in Python

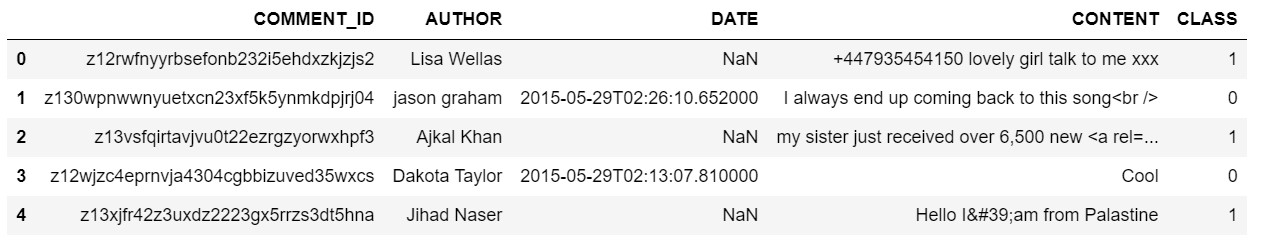

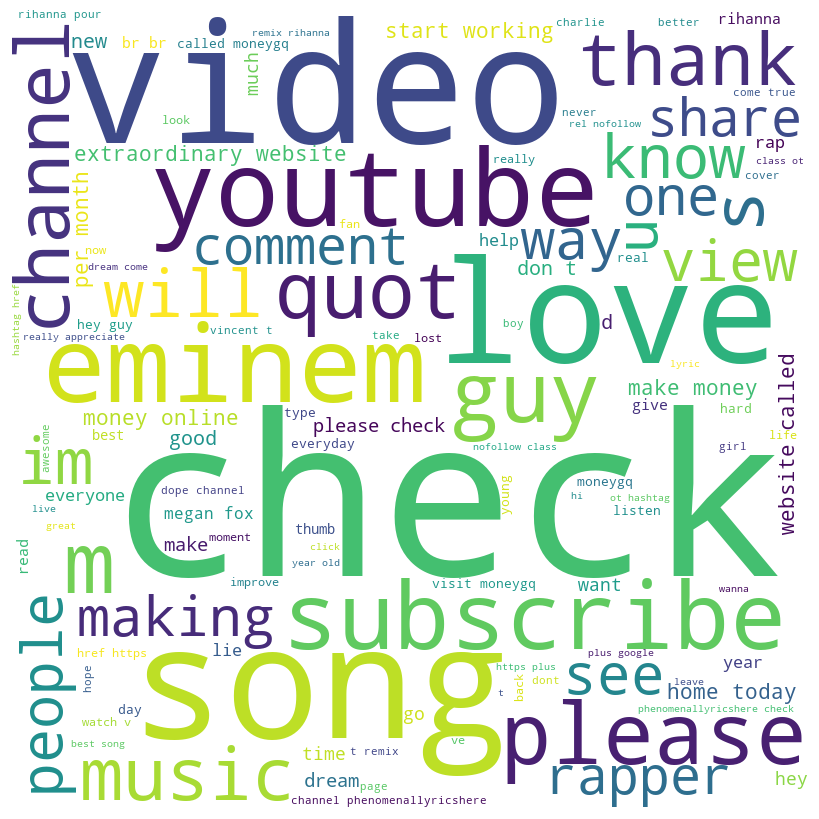

Exhibit 25.60 provides the Python code for generating a word cloud from the YouTube data stored in the file youtube_comments.csv. Here is a breakdown of what it does:

- Importing Libraries:
    - `wordcloud`: This library provides tools for creating word clouds.
    - `matplotlib.pyplot`: This library helps visualize the word cloud.
- YouTube Data File: The code opens the `youtube_comments.csv` table and reads it into the dataframe variable `df`.
- Extract Words from YouTube Comments: The code extracts the words from the CONTENT field and saves them into the string variable `comment_words`.
- Setting Up Stop Words: The code imports a set of common words considered unimportant for analysis, like “the”, “and”, “a”, etc. These are stored in `stopwords`.
- Creating the Word Cloud: `WordCloud` is called to create an image object. We specify:
    - Size: `width` and `height` are set to 800 pixels, making it an 800 × 800 image.
    - Background: `background_color` is set to “white” for a clean background.
    - Stop Words: We tell the word cloud to exclude the stop words defined earlier using `stopwords`.
    - Minimum Font Size: `min_font_size` is set to 10 so that even the smallest words remain legible.
    - `generate(comment_words)`: This call takes the words in `comment_words` and uses them to create the word cloud.
- Visualizing the Word Cloud:
    - `plt.figure`: Creates a new figure for displaying the image.
    - `plt.imshow(wordcloud)`: Displays the generated word cloud on the figure.
    - `plt.axis("off")`: Hides the x and y axes, since they are not relevant for the word cloud.
    - `plt.tight_layout(pad = 0)`: Adjusts spacing so that the word cloud fills the entire area without extra padding.
    - `plt.show()`: Finally, this line displays the generated word cloud image on your screen.

Import Modules and Read Data

    # Import all necessary modules
    from wordcloud import WordCloud, STOPWORDS  # for generating the word cloud
    import matplotlib.pyplot as plt  # for visualizing the data
    import pandas as pd  # pandas: Python data analysis library
    import nltk  # Natural Language Toolkit

    # Read the 'youtube_comments.csv' file, which contains data from YouTube
    df = pd.read_csv("data/youtube_comments.csv")
    df[:5]  # display the first five records

Extract Tokens into a word string 'comment_words'

    stopwords = set(STOPWORDS)  # working copy of the built-in stop-word set

    # Extract words from the CONTENT field and save them into the string variable comment_words
    comment_words = ''
    for val in df.CONTENT:  # iterate through the table's CONTENT field
        # typecast each val to string
        val = str(val)
        # split the text in val and save the words into tokens
        tokens = val.split()  # list of words from the CONTENT field
        # convert each token in tokens to lowercase
        for i in range(len(tokens)):
            tokens[i] = tokens[i].lower()
        # join (i.e. concatenate) all tokens, separated by blank spaces
        comment_words += " ".join(tokens) + " "  # this builds one large string of tokens

    print(comment_words[:1000])  # print the first 1000 characters in comment_words

my sister just received over 6,500 new #active youtube views right now. the only thing she used was pimpmyviews. com cool hello i'am from palastine wow this video almost has a billion views! didn't know it was so popular go check out my rapping video called four wheels please ❤️ almost 1 billion aslamu lykum... from pakistan eminem is idol for very people in españa and mexico or latinoamerica help me get 50 subs please i love song :) alright ladies, if you like this song, then check out john rage. he's a smoking hot rapper coming into the game. he's not better than eminem lyrically, but he's hotter. hear some of his songs on my channel. the perfect example of abuse from husbands and the thing is i'm a feminist so i definitely agree with this song and well...if i see this someone
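The loop above grows `comment_words` by repeated string concatenation. As a minimal sketch of an equivalent and more compact construction, the version below collects all lowercase tokens in a list and joins them once. The `comments` list and the helper name `build_comment_words` are hypothetical, standing in for `df.CONTENT` and the notebook cell; they are not part of the original exhibit.

```python
# Hypothetical sample data standing in for df.CONTENT
# (float("nan") mimics how pandas represents an empty cell)
comments = [
    "Wow this video ALMOST has a billion views!",
    float("nan"),
    "I love song :)",
    42,
]

def build_comment_words(values):
    """Lowercase every whitespace-separated token and join all tokens with single spaces."""
    tokens = []
    for val in values:
        # str() guards against non-string cells, as in the original loop
        tokens.extend(str(val).lower().split())
    return " ".join(tokens)

comment_words = build_comment_words(comments)
print(comment_words)
```

Unlike the original loop, this sketch leaves no trailing space at the end of the string, which makes no difference to the word cloud. Note that in both versions an empty cell ends up as the literal token "nan", so it may be worth dropping missing values first if they are common in the data.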

Generate Wordcloud

    # Generate an 800 x 800 word cloud image from the tokens in comment_words
    stopwords = set(STOPWORDS)  # working copy of the built-in stop-word set
    wordcloud = WordCloud(width=800, height=800, background_color='white',
                          stopwords=stopwords, min_font_size=10).generate(comment_words)

    # plot the WordCloud image
    plt.figure(figsize=(8, 8), facecolor=None)
    plt.imshow(wordcloud)
    plt.axis("off")
    plt.tight_layout(pad=0)
    plt.show()

Exhibit 25.60 This code demonstrates how to generate a word cloud of the comments from a CSV data file containing YouTube posts (Jupyter notebook).

This code processes the comments in a YouTube data file, removes unimportant words (stop words), and then creates a visual representation of the comments in which the size of each word reflects how often it appears in the text.
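To make the link between word size and frequency concrete, here is a minimal sketch that counts the non-stop-word tokens in a string using only the standard library. The `stop_words` set and the sample `comment_words` string are hypothetical stand-ins for the `STOPWORDS` set and the YouTube comments. The `wordcloud` library's own tokenisation and scaling differ in detail, so this illustrates the idea rather than the library's internals.

```python
from collections import Counter

# Hypothetical stand-ins: a tiny stop-word set and a short comment string
stop_words = {"the", "a", "is", "this", "and"}
comment_words = "this song is the best song and the video is a great video song"

# Count only the tokens that are not stop words
counts = Counter(tok for tok in comment_words.split() if tok not in stop_words)

# Most frequent words first; in a word cloud these would be drawn largest
for word, n in counts.most_common(3):
    print(word, n)
```

Inspecting the counts in this way can also help when choosing extra, domain-specific stop words to add before generating the cloud.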

Another coding example for generating a word cloud is provided in section Word Cloud (FB data) in Python.

Note that you can also use online resources such as Word Cloud Generator to quickly generate word clouds.


Contact | Privacy Statement | Disclaimer: Opinions and views expressed on www.ashokcharan.com are the author’s personal views, and do not represent the official views of the National University of Singapore (NUS) or the NUS Business School | © Copyright 2013-2026 www.ashokcharan.com. All Rights Reserved.