Biometrics

Biometrics

Eye Tracking

How it Works

Devices

Metrics

Fixations and Saccades

Heat Maps

Pupillometry

Eye Language

Applications

Electroencephalography

Frequency-based Analysis

Metrics

Approach-Avoidance

Cognitive-Affective

Facial Coding

Facial Expressions

Facial Action Coding System

Metrics

Analysis

Applications

Galvanic Skin Response

Devices

Analysing GSR Signals

Metrics

Applications

Biometrics — Applications

- How Advertising Works

- Advertising Analytics

- Packaging

- Biometrics

- Marketing Education

- Is Marketing Education Fluffy and Weak?

- How to Choose the Right Marketing Simulator

- Self-Learners: Experiential Learning to Adapt to the New Age of Marketing

- Negotiation Skills Training for Retailers, Marketers, Trade Marketers and Category Managers

- Simulators becoming essential Training Platforms

- What they SHOULD TEACH at Business Schools

- Experiential Learning through Marketing Simulators

MarketingMind

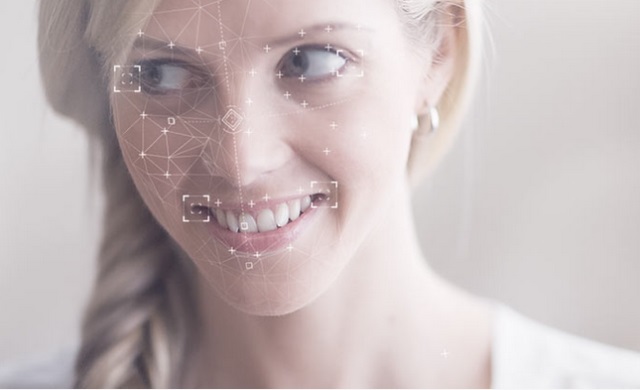

Facial Coding

Facial expressions are indeed the most eloquent form of body language, conveying a wealth of information about the emotions of the person expressing them. These expressions tend to be universally recognized across different cultures, making facial coding a powerful tool for measuring emotional responses to advertising and other visual content.

Dating back to Charles Darwin’s ground-breaking theories, the language of the emotions forms the basis of facial coding. Darwin suggested that facial expressions were innate and common to humans and other mammals.

In the 1970s, Paul Ekman’s studies further corroborated this theory.

He defined six basic facial expressions — anger, happiness, surprise, disgust, sadness and fear — in terms of facial muscle movements. Subsequently, he and Wallace Friesen created the Facial Action Coding System (FACS) that maps the coordinates of the face to the muscle movements associated with the key emotions.

Facial coding is the process of quantifying emotions by tracking and analysing facial expressions. Recorded online through web cameras, consumers’ expressions are analysed by computer algorithms to reveal their emotions.

Today, thanks to advances in information technology, facial coding is widely adopted by marketers and researchers for measuring emotions in advertising. Computer algorithms record and analyse expressions formed by facial features such as eyes, mouth and eyebrows, detecting even the tiniest movements of facial muscles to reveal a range of emotions. Many of these responses are so fleeting that consumers may not even remember them, let alone be able to objectively report them.

These real-time insights into viewers’ spontaneous, unfiltered reactions to visual content yield a continuous flow of emotional and cognitive metrics, which can be used to optimize marketing strategies and enhance the effectiveness of advertising campaigns.

Well-known service providers in this field include EyeSee, Emotient (acquired by Apple in 2016), Affdex, Realeyes and Kairos. These companies use cloud-based solutions and advanced algorithms to measure a range of emotions and facial expressions.

Facial Expressions

Facial expressions are formed by the movement of 43 facial muscles, which are almost entirely innervated by the facial nerve, aka the seventh cranial nerve. These muscles are attached to either a bone and facial tissue or just facial tissue.

Facial expressions can appear voluntarily, as when putting on a forced smile, or involuntarily, as when laughing at a clown.

The facial nerve, which emerges from the brainstem, controls involuntary and spontaneous expressions. Intentional facial expressions, on the other hand, are controlled by a different part of the brain, the motor cortex. This is why a fake smile does not look or feel the same as a genuine smile: it does not reach the eyes.

Facial expressions can be categorized into three types: macro expressions, micro expressions and subtle expressions. Macro expressions last up to 4 seconds and are obvious to the naked eye. Micro expressions, on the other hand, last only a fraction of a second, and are harder to detect. They appear when the subject is either deliberately or unconsciously concealing a feeling.

Subtle expressions are associated with the intensity of the emotion, not the duration. They emerge either at the onset of an emotion, or when the emotional response is of low intensity.

While facial coding can detect macro expressions, it is unable to capture finer micro expressions or the subtle facial expressions where the underlying musculature is not active enough to move the skin.

An alternative technique, Facial Electromyography (fEMG), can detect these finer movements by measuring the activation of individual muscles. It has much higher temporal resolution, which makes it ideal for recording subtle, fleeting expressions. However, since it is not practical to place more than one or two electrodes on the face, the range of expressions fEMG can track is limited. The additional hardware also makes it much less versatile than facial coding for large-scale consumer research studies.

Facial Action Coding System (FACS)

Facial Action Coding System (FACS) is a method of measuring facial expressions and describing observable facial movements. It breaks down facial expressions into elementary components of muscle movement called Action Units (AUs).

Labelled as AU0, AU1, AU2 etc., AUs correspond to individual muscles or muscle groups. They combine in different ways to form facial expressions. The analysis of the AUs of a facial image, therefore, leads to the detection of the expression on the face.

Automated facial coding (AFC), powered by machine learning algorithms and webcams, has become popular across numerous sectors, including marketing analytics. It typically involves a 3-step process:

- Face detection: When you take a photo with a camera or a smartphone, you may notice boxes framing the faces of the individuals in the photo. These boxes appear because your camera is using face detection algorithms. AFC uses the same technology to detect faces.

- Facial landmark detection (Exhibit 15.19): AFC then detects facial landmarks, such as eyes and eye corners, brows, mouth corners, and nose tip, and creates a simplified face model that matches the actual face, but only includes the features required for coding.

- Coding: Machine learning algorithms analyse the facial landmarks and translate them into action unit codes. The combinations of AUs are then statistically interpreted to yield metrics for facial expressions.
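The coding step can be illustrated with a toy lookup that matches detected AUs against emotion prototypes. This is a minimal sketch, not how any particular vendor works: the AU combinations below are commonly cited prototypes used here as assumptions, the function name `match_emotions` is hypothetical, and real systems interpret AU combinations statistically rather than by exact set matching.

```python
# Commonly cited AU prototypes, used here purely for illustration.
EMOTION_PROTOTYPES = {
    "happiness": {6, 12},       # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},  # brow raisers + upper lid raiser + jaw drop
}


def match_emotions(active_aus):
    """Return the emotions whose prototype AUs are all among the active AUs."""
    active = set(active_aus)
    return sorted(
        name for name, aus in EMOTION_PROTOTYPES.items() if aus <= active
    )


# AU6 and AU12 together match the happiness prototype.
smile_match = match_emotions([6, 12])
# AU12 alone (without AU6) is not enough for happiness in this toy model.
surprise_match = match_emotions([1, 2, 5, 26, 12])
```

A rule-based lookup like this only shows how AUs combine into expressions; it ignores AU intensity and the probabilistic scoring described under Metrics.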

Metrics

Facial action coding systems typically capture a wide range of metrics and variables, such as the following:

- Emotions: joy, anger, surprise, fear, contempt, sadness and disgust.

- Facial expressions: attention, brow furrow, brow raise, cheek raise, chin raise, dimpler, eye closure, eye widen, inner brow raise, jaw drop, lid tighten, lip corner depressor, lip press, lip pucker, lip stretch, lip suck, mouth open, nose wrinkle, smile, smirk, upper lip raise.

- Emoji Expressions: laughing, smiley, relaxed, wink, kissing, stuck out tongue, stuck out tongue and winking eye, scream, flushed, smirk, disappointed, rage, neutral.

- Interocular distance: distance between the two outer eye corners.

- Engagement: emotional engagement or expressiveness. This is computed as a weighted sum of a set of facial expressions.

- Sentiment valence: positive, negative and neutral sentiments. Valence metric likelihood is calculated based on a set of observed facial expressions.

Emotion, expression and emoji metrics are probabilistic measures. Scores range from 0 to 100 and indicate the likelihood of a specific emotion or expression.
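Two of the metrics above lend themselves to a short worked example. The sketch below computes interocular distance from two landmark coordinates and engagement as a weighted sum of expression scores. The weights, the expression names and the normalisation by total weight (so the result stays on the 0 to 100 scale) are assumptions for illustration; vendors do not necessarily compute engagement this way.

```python
import math


def interocular_distance(left_outer_corner, right_outer_corner):
    """Euclidean distance between the two outer eye corners (pixel coordinates)."""
    return math.dist(left_outer_corner, right_outer_corner)


def engagement(expression_scores, weights):
    """Weighted sum of expression scores (0-100), normalised by total weight.

    Expressions missing from expression_scores are treated as 0.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted = sum(w * expression_scores.get(name, 0.0) for name, w in weights.items())
    return weighted / total_weight


# Hypothetical weights and scores for a single video frame.
weights = {"smile": 0.5, "brow_raise": 0.5}
frame_scores = {"smile": 80.0, "brow_raise": 20.0}
frame_engagement = engagement(frame_scores, weights)
eye_distance = interocular_distance((0.0, 0.0), (3.0, 4.0))
```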

International Affective Picture System (IAPS)

The International Affective Picture System (IAPS) is a database of around 1,000 standardized colour photographs that have been rated on their emotional content. It was designed specifically for emotion and attention research and is widely used in both academic and commercial research. Access to the database is restricted to academics only.

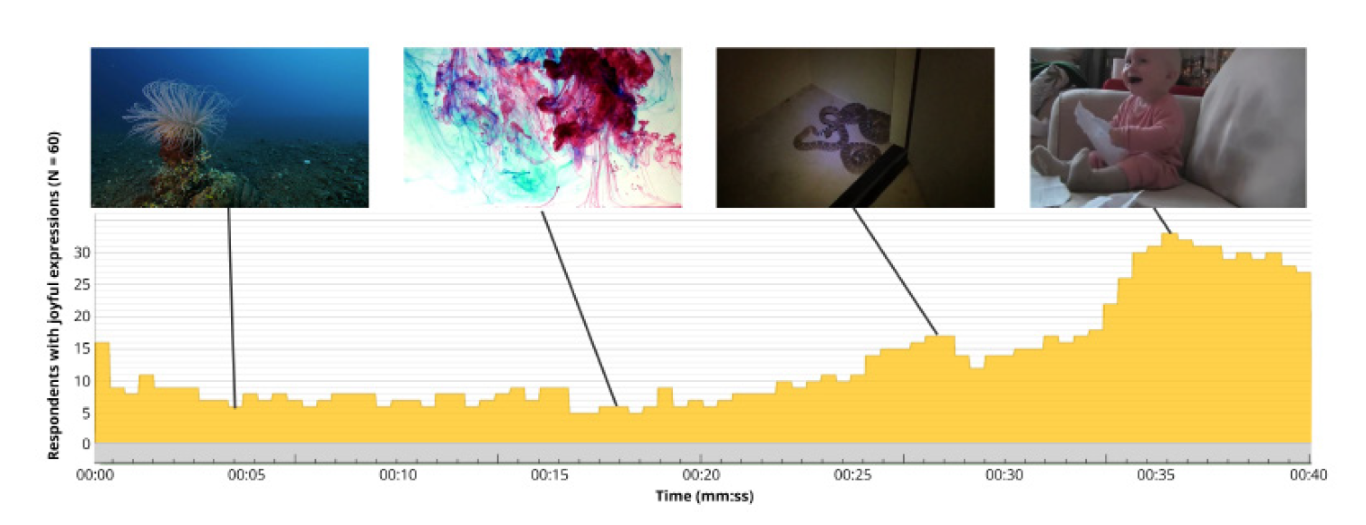

Analysis

The input for the analysis of facial expressions is essentially a video feed from a laptop, tablet, phone, GoPro or standalone webcam.

The video is split into several short intervals or epochs (for instance, 1 second each), and a median facial expression score is computed for each respondent, over each epoch, based on a signal threshold algorithm. Respondents are counted if their score exceeds the threshold level.

This framework permits easy quantification and aggregation of the data. For instance, consider Exhibit 15.20, pertaining to the analysis of a video. It depicts the number of respondents with joyful expressions over the course of the video.

A typical analysis would comprise a series of similar charts relating to different emotions, facial expressions, as well as metrics pertaining to engagement and sentiment valence.

The information is auto-generated and is easy to interpret.
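The epoch-and-threshold procedure described in this section can be sketched as follows. This is a simplified illustration under stated assumptions: per-frame scores on a 0 to 100 scale, a fixed number of frames per epoch, and a single threshold. The function name `count_above_threshold` and the sample data are hypothetical.

```python
from statistics import median


def count_above_threshold(scores_by_respondent, frames_per_epoch, threshold):
    """Count, for each epoch, the respondents whose median score exceeds the threshold.

    scores_by_respondent maps a respondent id to a list of per-frame scores.
    Only complete epochs covered by every respondent are analysed.
    """
    if not scores_by_respondent:
        return []
    n_frames = min(len(s) for s in scores_by_respondent.values())
    n_epochs = n_frames // frames_per_epoch
    counts = []
    for epoch in range(n_epochs):
        start = epoch * frames_per_epoch
        end = start + frames_per_epoch
        counts.append(
            sum(
                1
                for scores in scores_by_respondent.values()
                if median(scores[start:end]) > threshold
            )
        )
    return counts


# Hypothetical joy scores: 3 respondents, 6 frames, 3 frames per epoch.
joy_scores = {
    "r1": [10, 60, 70, 80, 20, 10],
    "r2": [5, 5, 5, 90, 95, 99],
    "r3": [0, 0, 0, 60, 70, 80],
}
joy_counts = count_above_threshold(joy_scores, frames_per_epoch=3, threshold=50)
```

Plotting the resulting counts against epoch number gives a chart of the kind described above, one series per emotion or expression.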

Applications

The potential of facial coding technology is huge. It is easy to implement (webcam-based, with no need for a controlled location), easy to deploy, scalable and affordable, and the information it produces is easy to interpret and visualize.

As people around the world watch TV or gaze at their computer screens, marketers might be staring right back, tracking their expressions and analysing their emotions.

The BBC, an early adopter, has used webcams and facial coding technology to track faces as people watch show trailers, to see what kinds of emotions the trailers produce. The broadcaster has also used the technology to study participants’ reactions to TV programs.

Research tools such as GfK’s EMO Scan use consumers’ own webcams, with their permission, to track their facial expressions in real time as they view advertising.

One of the limitations is that some facial expressions vary across cultures, individuals, and even demographics, such as age. Some individuals are expressive, others are impassive. This variance in facial muscle activity is why baselining is often recommended for 5 to 10 seconds at the start of a session.

For these reasons, it is problematic to compare the intensity of emotional expressions across different stimuli, individuals or cultures. So, while computer-based facial coding reveals the valence (positive/negative) and class of emotion, it cannot accurately assess emotional arousal (intensity).

One should consider using it in combination with other biometric technologies, such as galvanic skin response, that are able to capture emotional arousal.
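The baselining mentioned above can be sketched in a few lines. One simple approach, assumed here and not necessarily what commercial tools do, is to average each respondent's scores over the baseline period and subtract that average from the scores that follow. The function name `baseline_correct` and the sample values are hypothetical.

```python
from statistics import mean


def baseline_correct(scores, fps, baseline_seconds=5):
    """Subtract the mean of the baseline period from all later scores.

    scores is a list of per-frame expression scores for one respondent;
    the first fps * baseline_seconds frames are treated as the baseline.
    """
    n_baseline = int(fps * baseline_seconds)
    if n_baseline <= 0 or len(scores) < n_baseline:
        raise ValueError("not enough frames for the requested baseline")
    baseline = mean(scores[:n_baseline])
    return [s - baseline for s in scores[n_baseline:]]


# Hypothetical respondent: 2 frames per second, 2-second baseline.
raw = [10, 10, 10, 10, 30, 50]
corrected = baseline_correct(raw, fps=2, baseline_seconds=2)
```

Correcting against each respondent's own baseline makes expressive and impassive individuals more comparable, although it does not turn the scores into a measure of arousal.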


Contact | Privacy Statement | Disclaimer: Opinions and views expressed on www.ashokcharan.com are the author’s personal views, and do not represent the official views of the National University of Singapore (NUS) or the NUS Business School | © Copyright 2013-2026 www.ashokcharan.com. All Rights Reserved.