MarketingMind

Facial Coding Metrics

Facial action coding systems typically capture a wide range of metrics and variables, such as the following:

- Emotions: joy, anger, surprise, fear, contempt, sadness and disgust.

- Facial expressions: attention, brow furrow, brow raise, cheek raise, chin raise, dimpler, eye closure, eye widen, inner brow raise, jaw drop, lid tighten, lip corner depressor, lip press, lip pucker, lip stretch, lip suck, mouth open, nose wrinkle, smile, smirk, upper lip raise.

- Emoji Expressions: laughing, smiley, relaxed, wink, kissing, stuck out tongue, stuck out tongue and winking eye, scream, flushed, smirk, disappointed, rage, neutral.

- Interocular distance: distance between the two outer eye corners.

- Engagement: emotional engagement or expressiveness. This is computed as a weighted sum of a set of facial expressions.

- Sentiment valence: positive, negative and neutral sentiments. The likelihood of each valence is calculated from a set of observed facial expressions.

Emotion, expression and emoji metrics are probabilistic measures. Scores range from 0 to 100 and indicate the likelihood of a specific emotion or expression.
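As an illustration of how an engagement metric of this kind could be derived, the sketch below computes a weighted sum of expression likelihoods. The choice of expressions, the weights and the function name `engagement_score` are assumptions made for this example; the source states only that engagement is a weighted sum of a set of facial expressions.

```python
# Hypothetical weights: which expressions feed the engagement metric, and
# with what weight, is an assumption for illustration only.
ENGAGEMENT_WEIGHTS = {
    "brow_raise": 0.2,
    "brow_furrow": 0.2,
    "smile": 0.3,
    "nose_wrinkle": 0.1,
    "lip_corner_depressor": 0.2,
}


def engagement_score(expression_scores, weights=ENGAGEMENT_WEIGHTS):
    """Weighted sum of expression likelihoods (each 0-100), clipped to 0-100.

    Expressions missing from expression_scores are treated as 0.
    """
    total = sum(
        weight * expression_scores.get(name, 0.0)
        for name, weight in weights.items()
    )
    return max(0.0, min(100.0, total))


# One video frame for one respondent, with likelihood scores per expression.
frame = {"smile": 80.0, "brow_raise": 40.0, "brow_furrow": 0.0}
print(engagement_score(frame))
```

Because the weights here sum to 1, the result stays on the same 0 to 100 scale as the underlying expression scores.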

International Affective Picture System (IAPS)

The International Affective Picture System (IAPS) is a database of around 1,000 standardized colour photographs that have been rated on their emotional content. It was designed specifically for emotion and attention research and is widely used in both academic and commercial research. Access to the database is restricted to academics.

Analysis

The input for the analysis of facial expressions is essentially a video feed from a laptop, tablet, phone, GoPro or standalone webcam.

The video is split into short intervals, or epochs (for instance, 1 second each). For each respondent, a median facial expression score is computed over each epoch, and a signal threshold algorithm is applied: a respondent is counted for an epoch if their score exceeds the threshold level.

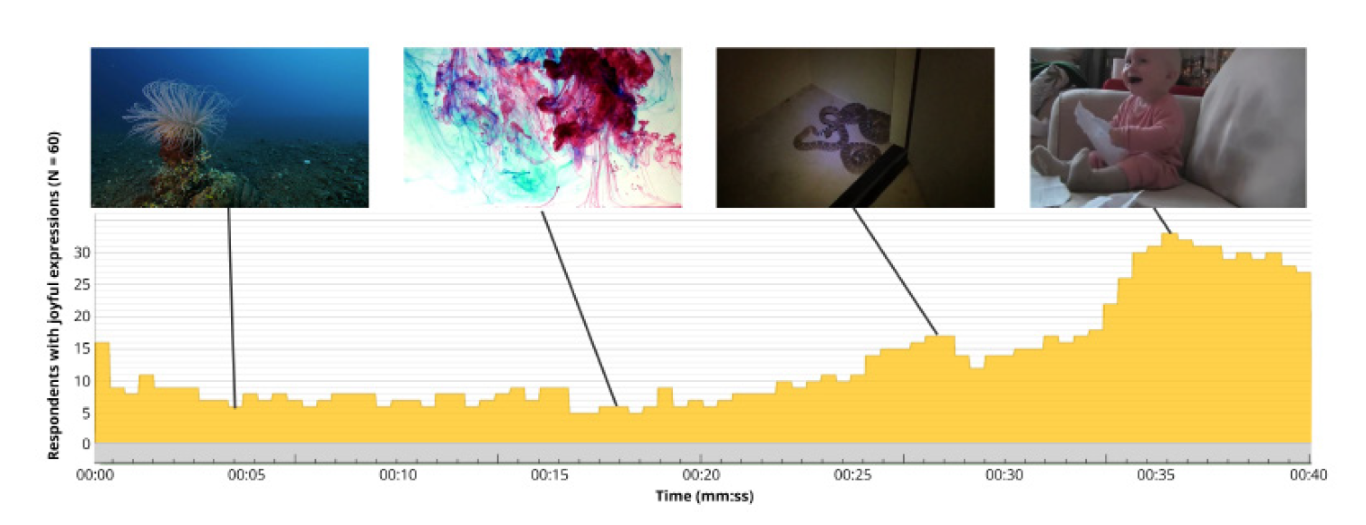

This framework permits easy quantification and aggregation of the data. For instance, consider Exhibit 15.20, pertaining to the analysis of a video. It depicts the number of respondents with joyful expressions over the course of the video.
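The epoch-and-threshold counting described above might be sketched as follows. This is a minimal illustration, not the algorithm used by any particular vendor: the function name `count_expressive_respondents`, the threshold of 50 and the sample data are all assumptions.

```python
from statistics import median


def count_expressive_respondents(scores_by_respondent, fps,
                                 epoch_seconds=1.0, threshold=50.0):
    """Count, per epoch, the respondents whose median score exceeds threshold.

    scores_by_respondent maps a respondent id to a list of per-frame
    likelihood scores (0-100) for one expression, for example joy.
    The threshold value is an assumption, not a documented default.
    """
    frames_per_epoch = max(1, int(round(fps * epoch_seconds)))
    n_frames = max((len(s) for s in scores_by_respondent.values()), default=0)
    counts = []
    for start in range(0, n_frames, frames_per_epoch):
        count = 0
        for scores in scores_by_respondent.values():
            window = scores[start:start + frames_per_epoch]
            # Skip respondents whose recording ended before this epoch.
            if window and median(window) > threshold:
                count += 1
        counts.append(count)
    return counts


# Made-up joy scores for three respondents, sampled at 3 frames per second.
joy = {
    "r1": [10, 20, 80, 90, 85, 70],
    "r2": [60, 70, 65, 20, 10, 15],
    "r3": [5, 5, 5, 55, 60, 58],
}
counts = count_expressive_respondents(joy, fps=3)
print(counts)
```

Plotting `counts` against epoch number gives a chart of the kind described here: the number of respondents showing a given expression over the course of the video.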

A typical analysis would comprise a series of similar charts relating to different emotions and facial expressions, as well as metrics pertaining to engagement and sentiment valence.

The information is auto-generated and is easy to interpret.

Applications

The potential of facial coding technology is huge: it is easy to implement (webcam-based, with no need for a controlled location), easy to deploy, scalable and affordable, and the information it produces is easy to interpret and visualize.

As people around the world watch TV or gaze at their computer screens, marketers might be staring right back, tracking their expressions and analysing their emotions.

The BBC, an early adopter, uses webcams and facial coding technology to track faces as people watch show trailers and to see what kinds of emotions the trailers produce. The broadcaster has also used the technology to study participants’ reactions to TV programs.

Research firms such as GfK, with its EMO Scan tool, use consumers’ own webcams, with their permission, to track their facial expressions in real time as they view advertising.

One of the limitations is that some facial expressions vary across cultures, individuals, and even demographics, such as age. Some individuals are expressive, others are impassive. Because of this variance in facial muscle activity, a baseline recording of 5 to 10 seconds at the start of a session is often recommended.

For these reasons, it is problematic to compare the intensity of emotional expressions across different stimuli, individuals or cultures. So, while computer-based facial coding reveals the valence (positive/negative) and class of emotion, it cannot accurately assess emotional arousal (intensity).

One should consider using it in combination with other biometric technologies, such as galvanic skin response, that are able to capture emotional arousal.
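The baselining step mentioned earlier in this section could be sketched as below. Subtracting the mean of the baseline window and flooring the result at zero is one simple approach, assumed here for illustration; the function name `baseline_correct` and the sample values are hypothetical.

```python
from statistics import mean


def baseline_correct(scores, fps, baseline_seconds=5.0):
    """Subtract the respondent's own baseline from the scores that follow it.

    The first baseline_seconds of the recording are treated as the
    respondent's resting level. Mean subtraction with a floor at 0 is an
    assumption, not a documented vendor method.
    """
    n_base = int(round(fps * baseline_seconds))
    if n_base <= 0 or len(scores) <= n_base:
        raise ValueError("recording is shorter than the baseline window")
    baseline = mean(scores[:n_base])
    return [max(0.0, s - baseline) for s in scores[n_base:]]


# Two respondents with different resting levels: the first two samples of
# each list form the baseline window (1 sample per second, 2 seconds).
expressive = [30, 30, 70, 90]
impassive = [5, 5, 25, 45]
print(baseline_correct(expressive, fps=1, baseline_seconds=2))
print(baseline_correct(impassive, fps=1, baseline_seconds=2))
```

Correcting each respondent against their own resting level makes an expressive and an impassive individual somewhat more comparable, though it does not remove the cultural and demographic differences noted above.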

Contact | Privacy Statement | Disclaimer: Opinions and views expressed on www.ashokcharan.com are the author’s personal views, and do not represent the official views of the National University of Singapore (NUS) or the NUS Business School | © Copyright 2013-2026 www.ashokcharan.com. All Rights Reserved.